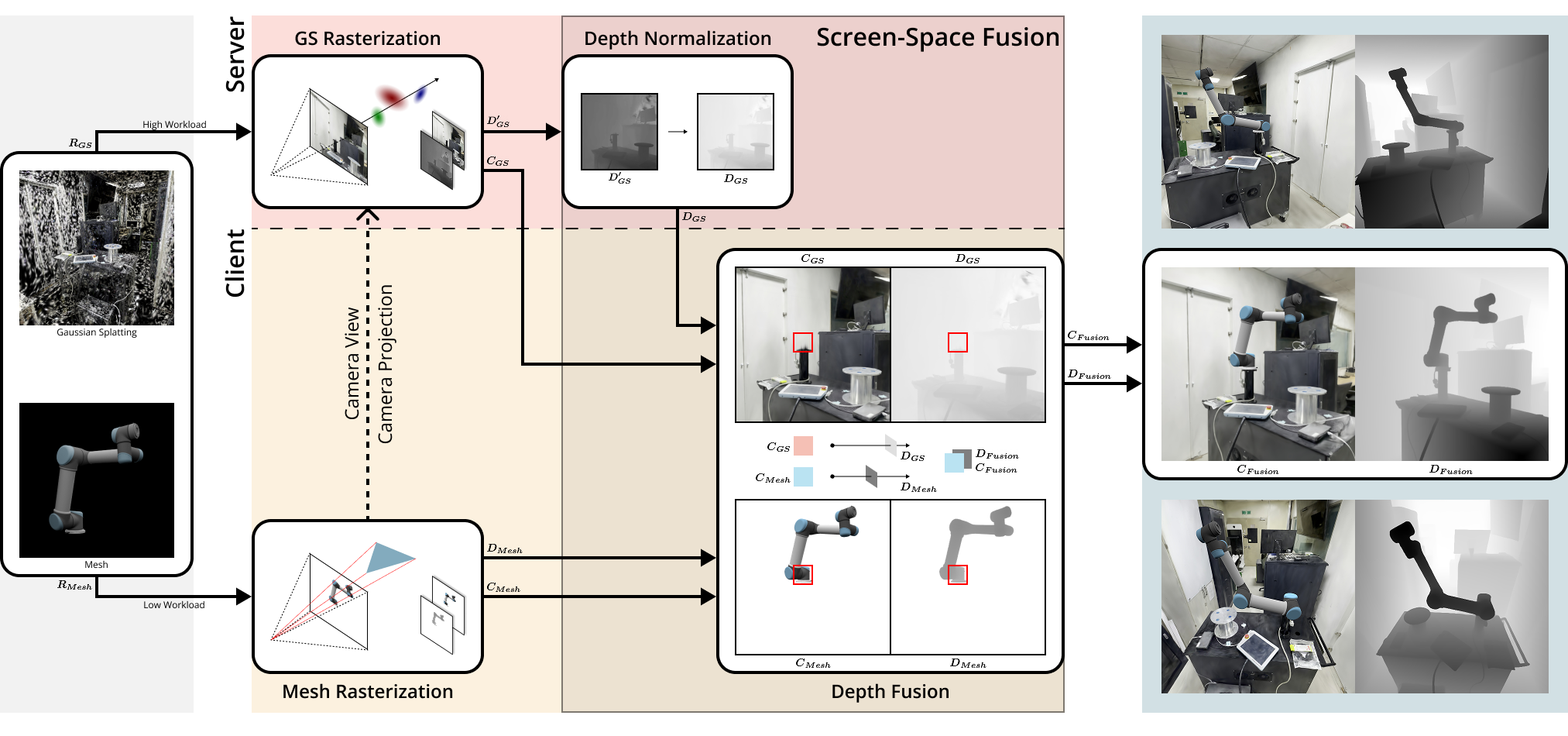

Neural rendering has recently emerged as a powerful technique for generating photorealistic 3D content with high visual fidelity. In parallel, web technologies such as WebGL and WebGPU support platform-independent, in-browser rendering, allowing broad accessibility without platform-specific installations. The convergence of these two advancements opens up new possibilities for delivering immersive, high-fidelity 3D experiences directly through the web. However, achieving such integration remains challenging due to strict real-time performance requirements and limited client-side resources. To address this, we propose a hybrid rendering framework that offloads high-fidelity 3D Gaussian Splatting (3DGS) and 4D Gaussian Splatting (4DGS) processing to a server, while delegating lightweight mesh rendering and final image composition to the client via depth-based screen-space fusion. This architecture ensures consistent performance across heterogeneous devices, reduces client-side memory usage, and decouples rendering logic from the client, so that evolving neural models can be integrated without frequent re-engineering. Empirical evaluations show that the proposed hybrid architecture reduces the computational burden on client devices while maintaining consistent performance across platforms. These results highlight the potential of our framework as a practical solution for accessible, high-quality neural rendering on the web.
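The depth-based screen-space fusion step can be illustrated with a per-pixel depth test. The sketch below is a minimal CPU version under stated assumptions: the server frame (Gaussian splatting color plus depth) and the client frame (mesh color plus depth) are rendered with the same camera and resolution, depth is linear view-space distance where smaller means closer, and pixels with no mesh coverage carry infinite depth. `Frame` and `fuse_depth` are hypothetical names. In a browser this comparison would more likely run in a WebGL or WebGPU fragment shader, and StreamSplat's actual compositing may differ.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Frame:
    """Hypothetical RGB-D frame stored as flat per-pixel lists of equal length."""
    color: list[tuple[int, int, int]]  # RGB per pixel
    depth: list[float]  # assumed linear view-space depth; smaller is closer, inf = empty


def fuse_depth(server: Frame, client: Frame) -> Frame:
    """Composite two RGB-D layers by keeping, per pixel, whichever one is closer.

    Assumes both frames share the same camera, resolution, and depth convention.
    """
    n = len(server.depth)
    if not (len(server.color) == len(client.color) == len(client.depth) == n):
        raise ValueError("frames must have matching resolutions")
    color: list[tuple[int, int, int]] = []
    depth: list[float] = []
    for sc, sd, cc, cd in zip(server.color, server.depth, client.color, client.depth):
        if cd <= sd:  # client mesh is in front (ties go to the mesh, an arbitrary choice)
            color.append(cc)
            depth.append(cd)
        else:  # server-rendered splat is in front
            color.append(sc)
            depth.append(sd)
    return Frame(color, depth)


if __name__ == "__main__":
    splats = Frame([(255, 0, 0)] * 4, [1.0, 2.0, 3.0, 4.0])  # server: splatting layer (red)
    mesh = Frame([(0, 0, 255)] * 4, [2.0, 2.0, 1.0, math.inf])  # client: mesh layer (blue)
    fused = fuse_depth(splats, mesh)
    print(fused.depth)  # [1.0, 2.0, 1.0, 4.0]
```

Letting the mesh win ties is only one option; a shader-based compositor might instead add a small depth bias to reduce z-fighting where the two layers nearly coincide.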

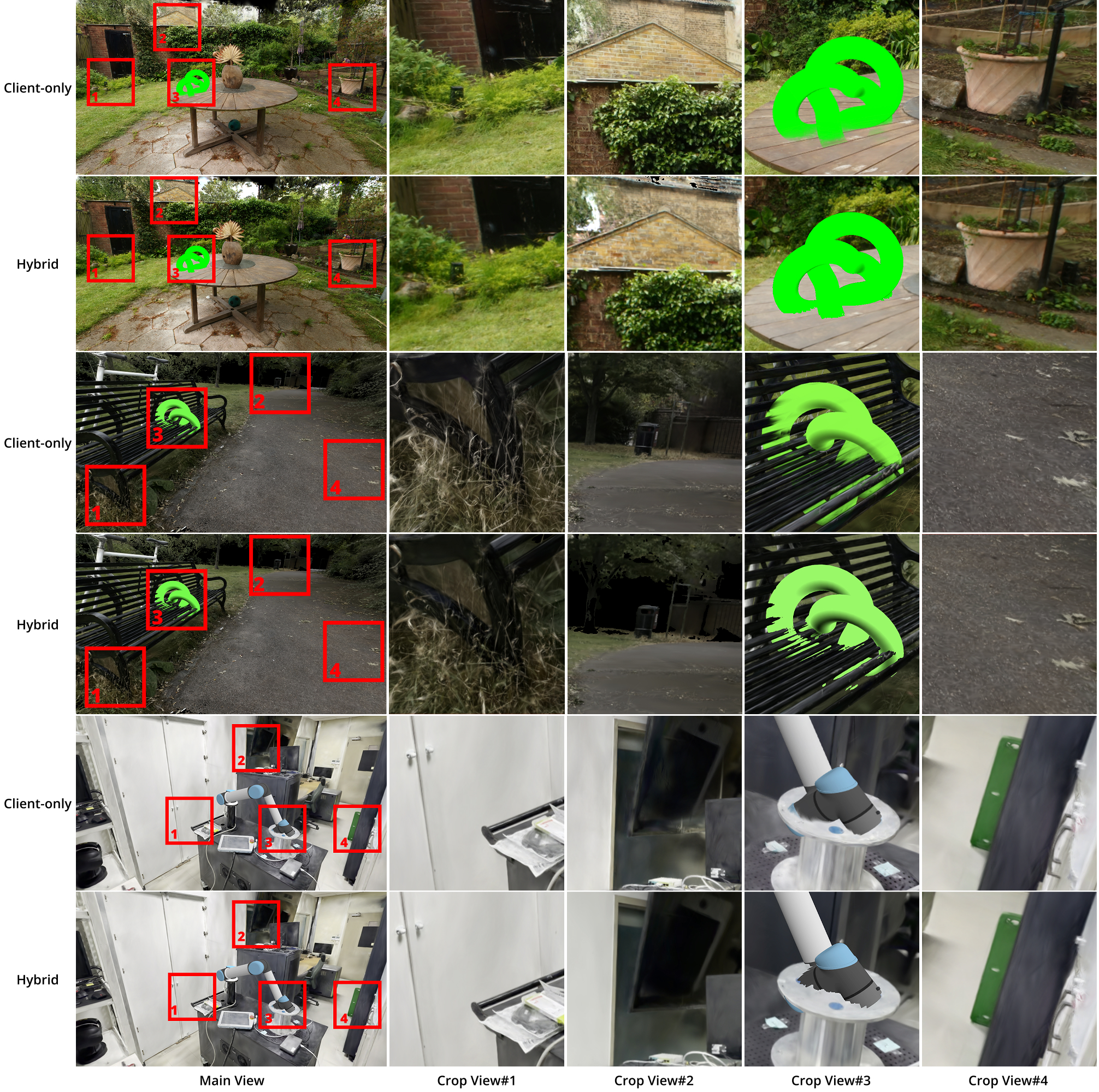

We evaluated the visual fidelity of two rendering pipelines: a client-only pipeline and our proposed hybrid pipeline. The client-only approach preserves finer image details because it involves no network compression, which is particularly evident in scenes with complex textures such as the Garden environment. However, it often suffers from the limitations of WebGL-based rendering, including depth-sorting inaccuracies and memory constraints, which produce visual artifacts such as z-fighting and distorted layering; these are especially noticeable in the Lab scene.

In contrast, our hybrid pipeline introduces JPEG compression to facilitate efficient network streaming, resulting in slight reductions in visual detail. Nevertheless, it delivers more stable and artifact-free rendering, particularly in scenes with intricate geometry and spatial interactions. Additionally, it effectively manages client-side resource constraints, ensuring consistent rendering performance even on devices with limited VRAM capacity, where the client-only pipeline fails to load complex scenes entirely.

Thus, while the client-only pipeline excels in preserving raw visual detail, our hybrid pipeline provides superior robustness and resource efficiency, offering a balanced solution for web-based neural rendering applications.

We compare two compression methods for streaming neural-rendered content: JPEG-based streaming and H.264-based streaming. In the JPEG-based approach, color images are compressed with JPEG while depth data is streamed uncompressed as 16-bit floating-point values, achieving high spatial accuracy at the cost of increased bandwidth and reduced frame rates. In contrast, the H.264-based approach compresses both color and depth images using hardware-accelerated H.264 encoding, significantly improving frame rates and bandwidth efficiency. However, the depth data undergoes nonlinear quantization into 8-bit grayscale images, introducing slight inaccuracies in the depth-based fusion. Thus, JPEG streaming is preferred for applications demanding high spatial precision, while H.264 streaming offers superior performance in interactive, real-time scenarios.
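The precision and bandwidth trade-off between the two depth encodings can be made concrete with a small calculation. The sketch below assumes a logarithmic mapping between hypothetical near and far planes (`NEAR`, `FAR`) for the 8-bit path; the text states only that the quantization is nonlinear, so the actual curve may differ. The 16-bit path uses IEEE 754 half precision via Python's `struct` `"e"` format. The sketch ignores the additional lossy H.264 stage, and the 1280×720 at 30 fps figure is purely illustrative, not a measurement from the evaluation. All function names are hypothetical.

```python
import math
import struct

# Hypothetical clipping planes in scene units (not taken from the paper).
NEAR, FAR = 0.1, 100.0


def encode_depth_u8(d: float, near: float = NEAR, far: float = FAR) -> int:
    """Quantize depth to 0..255 on a log scale (an assumed nonlinear mapping)."""
    d = min(max(d, near), far)  # clamp into the representable range
    t = math.log(d / near) / math.log(far / near)  # 0 at near, 1 at far
    return round(t * 255)


def decode_depth_u8(q: int, near: float = NEAR, far: float = FAR) -> float:
    """Invert the log mapping: each code step multiplies depth by a fixed ratio."""
    return near * (far / near) ** (q / 255)


def roundtrip_depth_f16(d: float) -> float:
    """Pack to IEEE 754 half precision (2 bytes) and back, as a raw 16-bit stream would."""
    return struct.unpack("<e", struct.pack("<e", d))[0]


def raw_depth_mbps(width: int, height: int, fps: float, bytes_per_pixel: int = 2) -> float:
    """Uncompressed depth bandwidth in megabits per second (before any transport compression)."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


if __name__ == "__main__":
    d = 7.3
    err_u8 = abs(decode_depth_u8(encode_depth_u8(d)) - d)
    err_f16 = abs(roundtrip_depth_f16(d) - d)
    print(f"8-bit log error at {d}: {err_u8:.4f}")
    print(f"float16 error at {d}: {err_f16:.6f}")
    print(f"raw float16 depth, 1280x720 at 30 fps: {raw_depth_mbps(1280, 720, 30):.1f} Mbit/s")  # 442.4
```

With this assumed mapping, each 8-bit step spans a fixed depth ratio of (FAR/NEAR)^(1/255), so the absolute error grows with distance. Half precision keeps roughly three significant decimal digits at every depth but costs two bytes per pixel.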

@inproceedings{park2025streamsplat,
  author = {Sehyeon Park and Yechan Yang and Myeongseong Kim and Byounghyun Yoo},
  title = {StreamSplat: A Hybrid Client--Server Architecture for Neural Graphics Using Depth-based Fusion on the Web},
  booktitle = {Proceedings of the 30th International Conference on 3D Web Technology (Web3D~'25)},
  series = {Web3D~'25},
  year = {2025},
  pages = {1--10},
  numpages = {10},
  location = {Siena, Italy},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  doi = {10.1145/3746237.3746316},
  url = {https://doi.org/10.1145/3746237.3746316},
  keywords = {neural graphics, depth fusion, web streaming, client-server}
}